Robots and remote control

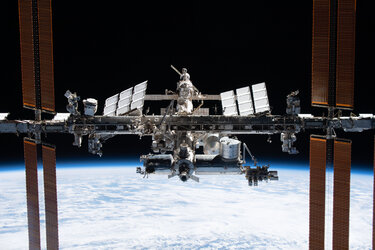

The Proxima mission saw ESA astronaut Thomas Pesquet continue pioneering work to control robots and hardware from the International Space Station.

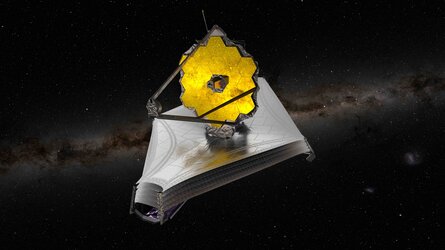

Space exploration will most likely involve sending robotic explorers to ‘test the waters’ on uncharted planets before humans land there, and ESA is preparing for that future.

Controlling a rover on Mars is a real headache for mission controllers because commands can take an average of 14 minutes to reach the Red Planet.

A project called Meteron is developing the tools to control robots on distant planets while astronauts orbit above. This includes developing a robust space-internet, designing the software to control the robots and developing the interface hardware.
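Controlling robots from orbit helps mainly because of signal travel time. The rough light-time comparison below takes the 14-minute average Earth-to-Mars figure above as given. It also assumes, purely for illustration, an orbiter 400 km directly above the robot, and it ignores relay hops, processing and orbital geometry. All names and the 400 km altitude are hypothetical.

```python
# Rough light-time comparison: commanding a Mars robot from Earth versus
# from a spacecraft orbiting above it. Processing and relay hops are ignored.

SPEED_OF_LIGHT_KM_S = 299_792.458  # km/s, exact by definition


def one_way_delay_s(distance_km: float) -> float:
    """Time in seconds for a signal to cover distance_km at the speed of light."""
    return distance_km / SPEED_OF_LIGHT_KM_S


# Earth to Mars: use the article's 14-minute average one-way figure directly.
earth_to_mars_one_way_s = 14 * 60

# Orbiter to surface: hypothetical straight-line distance of 400 km.
orbit_altitude_km = 400
orbit_to_surface_one_way_s = one_way_delay_s(orbit_altitude_km)

# Sending a command and seeing its result requires a full round trip.
earth_round_trip_s = 2 * earth_to_mars_one_way_s
orbit_round_trip_s = 2 * orbit_to_surface_one_way_s

print(f"Earth-to-Mars round trip: {earth_round_trip_s / 60:.0f} min")
print(f"Orbit-to-surface round trip: {orbit_round_trip_s * 1000:.2f} ms")
print(f"Ratio: {earth_round_trip_s / orbit_round_trip_s:,.0f}x")
```

The point is the order of magnitude. A round trip measured in minutes rules out moment-to-moment steering, while one measured in milliseconds leaves room for live, hands-on control.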

Haptics-1

Haptics-1 is an experiment that fits into the vision of astronauts controlling planetary explorers from orbit. Ideally, astronauts circling a planet would have as much feedback as possible to help control the robots exploring below them. An important aspect of this is ‘haptic’ feedback: transferring touch and vibrations. Most people can tie their shoelaces with their eyes closed, but try doing it when your hands are numb.

This is because your brain processes the feeling of touch and takes that into account when handling objects. Generally, a soft, fragile object is handled with more care than a hard one, and by judging how the object feels you automatically adjust your grip.

Haptics-1 is looking at developing robots that transmit touch information to the astronaut, but until now nobody has checked to see how people in space respond to force feedback. Will astronauts feel and react the same as on Earth to generated vibrations? How will the feedback feel in space, where the feedback joystick has to be strapped to their bodies to prevent them floating away?

The joystick itself is simple and moves only left or right. Behind the scenes, intricate servo-motors provide counterforce or vibrations. Thomas used the joystick to test the limits of feeling in experiments similar to the classic game Pong.
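The article does not describe how the servo-motors compute their counterforce. A common way to render force on a one-axis joystick is a virtual "wall" modelled as a spring, where F = -k·x once the stick passes the wall, with an optional sinusoidal vibration added on top. The function below sketches that generic technique. `render_force`, the normalised position range and all gain values are hypothetical, and forces are in arbitrary units.

```python
import math


def render_force(position: float, wall: float = 0.8, stiffness: float = 50.0,
                 vib_amplitude: float = 0.0, vib_freq_hz: float = 0.0,
                 t: float = 0.0) -> float:
    """Force to command on a hypothetical one-axis joystick.

    position: stick deflection, normalised to [-1, 1].
    wall: deflection beyond which a virtual wall pushes back.
    stiffness: spring constant of the virtual wall.
    vib_amplitude, vib_freq_hz, t: optional sinusoidal vibration at time t (s).
    """
    force = 0.0
    # Penetration past either wall produces a restoring spring force (F = -k * x).
    if position > wall:
        force -= stiffness * (position - wall)
    elif position < -wall:
        force -= stiffness * (position + wall)
    # Superimpose a vibration, e.g. to signal a Pong-style ball hitting the paddle.
    force += vib_amplitude * math.sin(2 * math.pi * vib_freq_hz * t)
    return force
```

Inside the walls the stick moves freely. Past them, the push-back grows with penetration depth, which is what lets a user "feel" a boundary without seeing it.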

Haptics-2

Haptics-2 extends Haptics-1 by connecting the force-feedback joystick on the International Space Station to a similar model on Earth, live. As Thomas moved the Haptics joystick, an operator on Earth felt the same forces as Thomas, much like shaking hands with someone thousands of kilometres away.
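A textbook way to couple two force-feedback devices so that each operator feels the other is a virtual spring-damper between their positions. The sketch below shows that generic technique and is not the Haptics-2 controller. `StickState`, `coupling_forces` and the gains `k` and `damping` are hypothetical, and the sketch ignores time delay, which a real space-to-ground link must handle.

```python
from dataclasses import dataclass


@dataclass
class StickState:
    position: float  # deflection, arbitrary units
    velocity: float  # rate of change of deflection


def coupling_forces(a: StickState, b: StickState,
                    k: float = 20.0, damping: float = 2.0) -> tuple[float, float]:
    """Spring-damper coupling between two joysticks.

    Each stick is pulled toward the other's position and velocity. The two
    forces are equal and opposite, so each operator feels the other's push.
    """
    force_on_a = k * (b.position - a.position) + damping * (b.velocity - a.velocity)
    return force_on_a, -force_on_a
```

If one operator pushes their stick to 0.5 while the other holds still at 0.0, the held stick is pulled forward and the pushing operator feels an equal resistance. That shared tug is the "handshake" sensation described above.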

The first-ever demonstration of space-to-ground remote control with live video and force feedback was performed with NASA astronaut Terry Virts orbiting Earth on the International Space Station as he shook hands with ESA telerobotics specialist André Schiele in the Netherlands in 2015.

Thomas continued to test the joystick that allows astronauts in space to ‘feel’ objects from hundreds of kilometres away.

Each signal between Thomas and Earth had to travel from the International Space Station to a relay satellite some 36 000 km above Earth, then through mission control in Houston, USA, and across the Atlantic Ocean to ESA’s ESTEC technical centre in the Netherlands. A round trip took up to 0.8 seconds in total.
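The 36 000 km relay altitude by itself accounts for much of the 0.8-second figure. The calculation below is a rough check that treats both the station-to-relay and relay-to-ground legs as 36 000 km. Real slant ranges usually exceed this, so the result is likely on the low side. Attributing the remainder to ground networks and processing is an inference, not something stated above.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # km/s

# Relay satellite altitude quoted in the article.
relay_altitude_km = 36_000

# Simplifying assumption: station-to-relay and relay-to-ground legs are each ~36 000 km.
space_path_one_way_km = 2 * relay_altitude_km
space_one_way_s = space_path_one_way_km / SPEED_OF_LIGHT_KM_S
space_round_trip_s = 2 * space_one_way_s

# Upper bound on the total round trip quoted in the article.
reported_round_trip_s = 0.8
other_s = reported_round_trip_s - space_round_trip_s

print(f"Space-segment light time, round trip: {space_round_trip_s:.2f} s")
print(f"Left for ground networks and processing: up to {other_s:.2f} s")
```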

As the Space Station travels at 28 800 km/h, the time for each signal to reach its destination changes continuously, but the system automatically adjusts to varying time delays.
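The article does not say how the system adapts to the changing delay. As a generic illustration only, a widely used way to track a varying round-trip time is the smoothed estimator that TCP uses for its retransmission timer (Jacobson/Karels, as specified in RFC 6298). The `DelayEstimator` class and its `conservative_delay` method are hypothetical names for this sketch.

```python
from typing import Optional


class DelayEstimator:
    """Smoothed round-trip-time estimate in the style of RFC 6298."""

    ALPHA = 1 / 8  # gain for the smoothed RTT
    BETA = 1 / 4   # gain for the RTT variation

    def __init__(self) -> None:
        self.srtt: Optional[float] = None  # smoothed round-trip time (s)
        self.rttvar: float = 0.0           # round-trip time variation (s)

    def update(self, sample_s: float) -> float:
        """Fold in a new RTT measurement and return the updated smoothed RTT."""
        if self.srtt is None:
            # The first sample initialises both estimates.
            self.srtt = sample_s
            self.rttvar = sample_s / 2
        else:
            # RFC 6298 updates the variation before the mean.
            self.rttvar = (1 - self.BETA) * self.rttvar + self.BETA * abs(self.srtt - sample_s)
            self.srtt = (1 - self.ALPHA) * self.srtt + self.ALPHA * sample_s
        return self.srtt

    def conservative_delay(self) -> float:
        """Upper-end delay estimate: smoothed RTT plus four times its variation."""
        if self.srtt is None:
            raise RuntimeError("no RTT samples yet")
        return self.srtt + 4 * self.rttvar
```

Feeding the estimator timestamped echo measurements as the station moves lets the estimate follow the drifting delay without overreacting to a single late packet. How the Haptics system itself uses delay information is not described here.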

The system’s adaptability and robust design mean it can also be used over ordinary mobile phone data networks. This makes it well suited for remote areas that are difficult to access or when disasters have destroyed other communication networks.

The direct and sensitive feedback coupled with safeguards against excessive forces would allow rovers and robots to carry out delicate operations in the extreme conditions found in offshore drilling and nuclear reactors, for example. It could even help to provide humanitarian aid after earthquakes or other natural disasters.
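The article mentions safeguards against excessive forces without describing them. One simple, generic safeguard caps the magnitude of every force command while keeping its direction, and the sketch below applies it to a two-axis command. `limit_force` and `max_force` are hypothetical names. A real system would likely combine such a cap with other protections.

```python
import math


def limit_force(fx: float, fy: float, max_force: float) -> tuple[float, float]:
    """Scale a 2-D force command so its magnitude never exceeds max_force.

    The direction is preserved and only the magnitude is capped. max_force is a
    hypothetical per-task limit, e.g. lower when handling fragile objects.
    """
    if max_force <= 0:
        raise ValueError("max_force must be positive")
    magnitude = math.hypot(fx, fy)
    if magnitude <= max_force:
        return fx, fy
    scale = max_force / magnitude
    return fx * scale, fy * scale
```

Scaling the whole vector, rather than clipping each axis separately, keeps the robot pushing in the direction the operator intended while limiting how hard it pushes.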
