Asteroid landing systems given trial by software

On screen, the cratered alien landscape hurtles ever closer – putting asteroid or planetary touchdown techniques through the most realistic simulation possible, short of actual spaceflight. A new generation of high-performance software is enabling real-time testing of both landing algorithms and hardware.

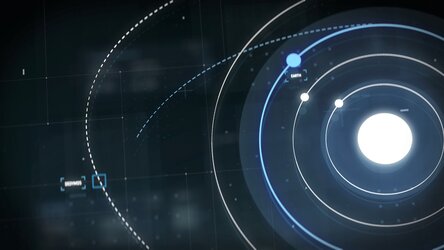

‘Entry, descent and landing’ on a planetary body is an extremely risky move: decelerating from orbital velocities of multiple km per second down to zero, at just the right moment to settle softly on an unknown surface, while avoiding craters, boulders and other unpredictable hazards.

In the case of low-gravity comets or asteroids, the experience of Rosetta’s Philae lander in 2014 shows there is a real danger of bouncing off the surface and back into space. On the Moon, stronger gravity makes crashing the bigger risk, and the same is true for the more massive Mars, which adds the complication of a thin but non-negligible atmosphere.

But practice makes perfect: that is the thinking behind Pangu, ESA’s new-generation ‘Planetary and Asteroid Natural scene Generation Utility’ software, developed for the Agency by the University of Dundee in Scotland.


“The software can now generate realistic images of planets and asteroids on a real-time basis, as if approaching a landing site during an actual mission,” explains guidance, navigation and control engineer Manuel Sanchez Gestido.

“New images are generated every tenth of a second, with no rendering delay. What this means is we can test landing algorithms, or dedicated microprocessors or entire landing cameras or other hardware ‘in the loop’ – plugged directly into the simulation – or run thousands of simulations one after the other on a ‘Monte Carlo’ basis, to test all eventualities.”
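The article does not say how ESA structures its Monte Carlo campaigns, so the sketch below only illustrates the general idea: run the same landing logic many times with randomly dispersed starting conditions and count how often it succeeds. It assumes a hypothetical toy 1-D vertical descent (`simulate_descent`) in place of a real guidance algorithm and image simulation; all parameter values and the 1 m/s "safe touchdown" threshold are illustrative assumptions, not Pangu or mission figures.

```python
import random
from dataclasses import dataclass


@dataclass
class Outcome:
    touchdown_speed: float  # m/s, magnitude of vertical velocity at contact
    success: bool


def simulate_descent(h0, v0, g, max_thrust_acc, dt=0.1, safe_speed=1.0):
    """Toy 1-D vertical descent (hypothetical stand-in for a real landing algorithm).

    h0: start altitude (m); v0: vertical velocity (m/s, negative = downward);
    g: surface gravity (m/s^2); max_thrust_acc: engine acceleration limit (m/s^2).
    dt = 0.1 s mirrors the ten-images-per-second rate quoted in the article.
    """
    h, v = h0, v0
    while h > 0.0:
        # Guidance: descend at a rate proportional to altitude, never slower than 0.5 m/s
        v_target = -max(0.5, 0.05 * h)
        # Control: cancel gravity plus a proportional velocity correction,
        # clipped because the engine can only push upward, up to its limit
        a_cmd = g + 2.0 * (v_target - v)
        a_thrust = min(max(a_cmd, 0.0), max_thrust_acc)
        # Semi-implicit Euler integration of the vertical dynamics
        v += (a_thrust - g) * dt
        h += v * dt
    speed = abs(v)
    return Outcome(speed, speed <= safe_speed)


def monte_carlo(n_runs, seed=0):
    """Run n_runs dispersed descents and return (success rate, outcomes)."""
    rng = random.Random(seed)  # fixed seed makes a campaign reproducible
    outcomes = []
    for _ in range(n_runs):
        h0 = rng.gauss(1500.0, 100.0)       # start altitude dispersion, m
        v0 = rng.gauss(-20.0, 5.0)          # start velocity dispersion, m/s
        g = 1.62 * rng.uniform(0.98, 1.02)  # lunar gravity with +/-2% uncertainty
        thrust = rng.uniform(4.0, 6.0)      # engine performance dispersion, m/s^2
        outcomes.append(simulate_descent(h0, v0, g, thrust))
    rate = sum(o.success for o in outcomes) / n_runs
    return rate, outcomes
```

For example, `rate, runs = monte_carlo(1000)` returns the fraction of dispersed runs that touched down below the assumed safe speed. In a real hardware-in-the-loop setup, the call to `simulate_descent` would be replaced by the algorithm or device under test, fed with rendered images at each step.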

Pangu has been under continuous development since it was first created in the late 1990s, initially for lunar landing projects.

“Back then it was wireframe imagery, and images were generated in slow motion – an entire landing would have taken hours to render,” adds Manuel.

“But we’ve taken advantage of general improvements in computing capabilities to bring its performance up to real-time, and to make the graphics as realistic as possible.

“That’s important because many modern navigation and landing algorithms work on the basis of visual detection and mapping of surface features.”

At high altitude, fine features cannot be resolved, but smaller details are revealed as the simulated lander comes closer and the patch of ground within the camera’s field of view shrinks. This keeps the software’s computational needs roughly constant.
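The article does not describe how Pangu manages level of detail internally. As a rough illustration of why the workload can stay constant, the sketch below assumes a nadir-pointing pinhole camera with a square image over flat terrain, treats a feature as resolvable once it spans two pixels (an assumed threshold), and uses a hypothetical quadtree-style mesh in which each level halves the vertex spacing. The functions and parameters are illustrative, not Pangu's API.

```python
import math


def ground_footprint(altitude_m, fov_deg):
    """Width of ground seen by a nadir-pointing pinhole camera over flat terrain (m)."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


def ground_sample_distance(altitude_m, fov_deg, pixels_across):
    """Ground distance covered by one pixel (m per pixel)."""
    return ground_footprint(altitude_m, fov_deg) / pixels_across


def smallest_resolvable(altitude_m, fov_deg, pixels_across, min_pixels=2.0):
    """Smallest feature spanning at least min_pixels pixels (threshold is an assumption)."""
    return min_pixels * ground_sample_distance(altitude_m, fov_deg, pixels_across)


def lod_level(altitude_m, fov_deg, pixels_across, base_spacing_m):
    """Hypothetical quadtree-style level of detail: level k has vertex spacing
    base_spacing_m / 2**k; pick the coarsest level whose spacing is <= one pixel."""
    gsd = ground_sample_distance(altitude_m, fov_deg, pixels_across)
    return math.ceil(math.log2(base_spacing_m / gsd))


def visible_vertices(altitude_m, fov_deg, pixels_across, base_spacing_m):
    """Approximate number of mesh vertices inside the square camera footprint."""
    level = lod_level(altitude_m, fov_deg, pixels_across, base_spacing_m)
    spacing = base_spacing_m / 2.0 ** level
    per_side = ground_footprint(altitude_m, fov_deg) / spacing
    return per_side ** 2


for h in (10_000.0, 1_000.0, 100.0):
    print(f"{h:>8.0f} m: smallest feature {smallest_resolvable(h, 60.0, 1024):6.2f} m, "
          f"~{visible_vertices(h, 60.0, 1024, 50.0):,.0f} vertices in view")
```

The smallest resolvable feature scales linearly with altitude, while the vertex count in view stays between roughly one and four times the pixel count at every altitude, because the mesh is refined exactly as fast as the footprint shrinks.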

Generating a target body begins with a digital shape model, built from past observations, which is converted into a polygon mesh. A textured surface is then draped over the mesh, with colours based on observed reflectivity properties. Craters and boulders can be added according to the known distributions for the destination in question.
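The article does not specify how Pangu turns "known distribution values" into surface features. A common way to describe crater populations is a power-law size-frequency distribution, and the sketch below assumes one: diameters are drawn by inverse-transform sampling so that the cumulative count N(≥D) falls off as D^-slope, centres are scattered uniformly, and each crater is carved into a height grid as a simple parabolic bowl. The bowl shape, the 0.2 depth-to-diameter ratio and all parameter values are illustrative assumptions.

```python
import random
from dataclasses import dataclass


@dataclass
class Crater:
    x: float         # centre east coordinate within the patch, m
    y: float         # centre north coordinate within the patch, m
    diameter: float  # rim-to-rim diameter, m


def sample_diameters(n, d_min, d_max, slope, rng):
    """Inverse-transform sampling from a truncated power law whose cumulative
    count behaves as N(>=D) proportional to D**-slope, for d_min <= D < d_max."""
    a, c = d_min ** -slope, d_max ** -slope
    return [(a - rng.random() * (a - c)) ** (-1.0 / slope) for _ in range(n)]


def populate(patch_m, density_per_m2, d_min, d_max, slope, seed=0):
    """Scatter craters uniformly over a square patch.
    density_per_m2 is the assumed count of craters with D >= d_min per square metre."""
    rng = random.Random(seed)
    n = round(density_per_m2 * patch_m ** 2)  # a fuller model could draw n from a Poisson law
    return [Crater(rng.uniform(0.0, patch_m), rng.uniform(0.0, patch_m), d)
            for d in sample_diameters(n, d_min, d_max, slope, rng)]


def stamp(heights, cell_m, craters, depth_ratio=0.2):
    """Carve a parabolic bowl for each crater into a square height grid (metres).
    depth_ratio is an assumed depth-to-diameter ratio for simple craters."""
    for cr in craters:
        r2 = (cr.diameter / 2.0) ** 2
        depth = depth_ratio * cr.diameter
        for i, row in enumerate(heights):
            for j in range(len(row)):
                dx = (j + 0.5) * cell_m - cr.x  # cell-centre offset from crater centre
                dy = (i + 0.5) * cell_m - cr.y
                d2 = dx * dx + dy * dy
                if d2 < r2:
                    row[j] -= depth * (1.0 - d2 / r2)  # full depth at centre, zero at rim
    return heights


# Usage: a 64 m x 64 m patch at 1 m resolution with craters from 1 m to 20 m across
craters = populate(64.0, 0.01, 1.0, 20.0, 2.0, seed=3)
grid = stamp([[0.0] * 64 for _ in range(64)], 1.0, craters)
```

Steeper slopes produce many more small craters relative to large ones; boulders could be scattered the same way with their own distribution and a raised rather than sunken profile.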

In a further test of Pangu’s fidelity, Manuel recounts that past landings or close encounters can be recreated precisely from recorded telemetry: “We’ve rerun the landing of the Curiosity rover on Mars, for instance, and Japan’s Hayabusa spacecraft approaching the Itokawa asteroid, and putting them next to each other it was hard to tell which one was real and which was simulated.”
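How Pangu ingests recorded telemetry is not described here. One step any such replay needs is to bring irregularly timed trajectory samples onto the simulator's fixed frame clock; the sketch below assumes telemetry arrives as timestamped (x, y, z) positions and uses plain linear interpolation at the ten-frames-per-second rate mentioned earlier. The function name and data layout are hypothetical.

```python
import math
from bisect import bisect_right


def resample(times, positions, frame_rate_hz=10.0):
    """Linearly interpolate recorded (time, position) samples onto a fixed frame clock.

    times: strictly increasing sample times (s); positions: matching (x, y, z) tuples (m).
    Attitude would need its own interpolation (e.g. quaternion slerp) and is omitted here.
    """
    if len(times) != len(positions) or len(times) < 2:
        raise ValueError("need at least two matching time/position samples")
    dt = 1.0 / frame_rate_hz
    # Small epsilon guards against floating-point round-down of the frame count
    n_frames = math.floor((times[-1] - times[0]) * frame_rate_hz + 1e-9) + 1
    frames = []
    for k in range(n_frames):
        t = times[0] + k * dt
        # Index of the telemetry sample at or before t, kept inside the last interval
        i = min(bisect_right(times, t) - 1, len(times) - 2)
        w = (t - times[i]) / (times[i + 1] - times[i])
        p = tuple(a + w * (b - a) for a, b in zip(positions[i], positions[i + 1]))
        frames.append((t, p))
    return frames
```

Each returned `(t, position)` pair would then drive one rendered frame, so the simulated camera follows the recorded path and the output can be compared side by side with the real imagery.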

As a next step, ESA’s Guidance, Navigation and Control section is working on a comparable software system for simulating close approach to satellites for proposed servicing and debris removal spacecraft, starting with the Agency’s 2023 e.Deorbit mission.
